Modality-Independent Disentangled Neural Architecture for Enhanced Artificial Intelligence in Electronic Information Systems

Keywords:

Disentangled Representation Learning, Multimodal Transformers, Adversarial Training, Cross-modal Attention

Abstract

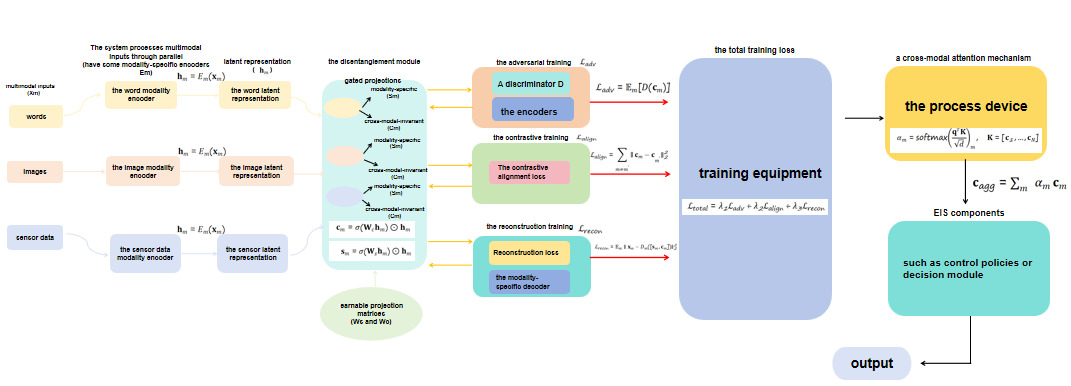

We propose a modality-independent disentangled neural architecture that enhances artificial intelligence in electronic information systems (EIS) by addressing the challenge of processing heterogeneous data modalities while preserving domain-invariant features. The method introduces a dual-encoder framework in which each modality is processed by a dedicated Transformer-based encoder, enabling tailored feature extraction for diverse inputs such as text, images, and sensor data. A disentanglement module then decomposes these features into modality-specific and cross-modal invariant components through a gated mechanism, which is further refined by adversarial training to suppress domain-specific artifacts. In addition, a contrastive alignment loss encourages consistency across modalities by minimizing the distance between the invariant features of paired samples. During inference, a cross-modal attention mechanism dynamically aggregates these features, allowing adaptive integration with downstream EIS components such as control algorithms or decision modules. The architecture replaces conventional feature extraction pipelines with a unified solution for applications such as smart grids, where the aggregated features can be used to optimize energy distribution dynamically. Key innovations include sparse attention for computational efficiency, residual connections for stable training, and Wasserstein GAN objectives for improved adversarial convergence. The framework aims to advance EIS by enabling robust, modality-agnostic representations while maintaining compatibility with existing systems.
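The gated disentanglement and contrastive alignment steps can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the gate is assumed to take the form g = σ(W h + b), with invariant part g ⊙ h and specific part (1 − g) ⊙ h, and the contrastive alignment loss is assumed to be an InfoNCE-style objective over cosine similarities with temperature τ, where the other samples in the batch serve as negatives. The function names (`gated_disentangle`, `info_nce`) and all parameter values are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def linear(W: List[Vector], b: Vector, x: Vector) -> Vector:
    """Affine map W x + b; a stand-in for a learned gating layer (hypothetical)."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def gated_disentangle(h: Vector, W: List[Vector], b: Vector) -> Tuple[Vector, Vector]:
    """Split an encoder feature h into (invariant, specific) parts.

    Assumed gate: g = sigmoid(W h + b); invariant = g * h, specific = (1 - g) * h.
    The two parts sum back to h element-wise.
    """
    g = [sigmoid(z) for z in linear(W, b, h)]
    invariant = [gi * hi for gi, hi in zip(g, h)]
    specific = [(1.0 - gi) * hi for gi, hi in zip(g, h)]
    return invariant, specific


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity with a small epsilon to avoid division by zero."""
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    return sum(x * y for x, y in zip(u, v)) / (nu * nv + 1e-12)


def info_nce(za: List[Vector], zb: List[Vector], tau: float = 0.1) -> float:
    """InfoNCE loss (assumed form of the alignment term).

    za[i] and zb[i] are the invariant features of the i-th paired sample in
    modalities A and B; every zb[j] with j != i acts as a negative for za[i].
    """
    n = len(za)
    total = 0.0
    for i in range(n):
        logits = [cosine(za[i], zb[j]) / tau for j in range(n)]
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(s - m) for s in logits))  # log-sum-exp
        total += log_denominator - logits[i]  # negative log-softmax of the positive pair
    return total / n


if __name__ == "__main__":
    W0 = [[0.0] * 3 for _ in range(3)]
    inv, spec = gated_disentangle([1.0, -2.0, 3.0], W0, [0.0, 0.0, 0.0])
    print(inv, spec)  # the gate is 0.5 everywhere here, so both halves are equal
    print(info_nce([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]))
```

With correctly paired inputs the loss is close to zero, while swapping the pairs makes it large, which is the behavior the alignment term relies on. Because the gated split is additive, the disentanglement itself discards nothing; separating the two parts meaningfully depends on the adversarial and alignment losses acting on the invariant branch.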
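The cross-modal attention aggregation can be illustrated with single-query scaled dot-product attention over one feature vector per modality. In this sketch the query is assumed to come from a downstream EIS component, each modality contributes one key/value pair, and the optional `top_k` argument is a hypothetical, simplified stand-in for sparse attention that keeps only the highest-scoring modalities. The abstract does not specify the sparsity pattern, and the fixed strided patterns used in Sparse Transformers are a different scheme. The function name `cross_modal_attention` is an assumption for illustration.

```python
import math
from typing import List, Optional, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Softmax with max-subtraction for numerical stability."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_modal_attention(
    query: Vector,
    keys: List[Vector],
    values: List[Vector],
    top_k: Optional[int] = None,
) -> Tuple[Vector, List[float]]:
    """Fuse one feature vector per modality into a single vector.

    query:  assumed to come from a downstream EIS component (hypothetical)
    keys:   one key vector per modality, same length as query
    values: one value vector per modality (e.g. its invariant features)
    top_k:  if set, only the top_k highest-scoring modalities get nonzero
            weight (an assumed, simple form of sparse attention)
    Returns the fused vector and the per-modality attention weights.
    """
    if top_k is not None and top_k < 1:
        raise ValueError("top_k must be at least 1")
    d = len(query)
    # Scaled dot-product scores, one per modality.
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    if top_k is not None and top_k < len(scores):
        keep = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
        kept = softmax([scores[i] for i in keep])
        weights = [0.0] * len(scores)  # pruned modalities get exactly zero weight
        for i, w in zip(keep, kept):
            weights[i] = w
    else:
        weights = softmax(scores)
    fused = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return fused, weights


if __name__ == "__main__":
    q = [1.0, 0.0]
    keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]    # e.g. text, image, sensor (toy data)
    values = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
    print(cross_modal_attention(q, keys, values))           # dense weights over all modalities
    print(cross_modal_attention(q, keys, values, top_k=1))  # only the best-matching modality
```

Because the weights depend on the query, the same modality features can be weighted differently for different downstream consumers, which is one way to read "adaptive integration" in the abstract.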
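The Wasserstein adversarial objective can be sketched as a critic that estimates the Wasserstein-1 distance between the invariant features of two modalities, which the encoder then tries to minimize so that modality-specific artifacts cannot be detected in the invariant branch. This toy version assumes a linear critic kept Lipschitz by weight clipping, as in the original WGAN formulation; the abstract does not say which Lipschitz constraint is used, and gradient penalty or spectral normalization are common alternatives. The names `critic`, `wasserstein_estimate`, and `train_critic`, and the values of `steps`, `lr`, and `clip`, are hypothetical.

```python
from typing import List

Vector = List[float]


def critic(w: Vector, z: Vector) -> float:
    """Linear critic f(z) = w . z (a toy stand-in for a neural critic)."""
    return sum(wi * zi for wi, zi in zip(w, z))


def wasserstein_estimate(w: Vector, feats_a: List[Vector], feats_b: List[Vector]) -> float:
    """Critic-based estimate E_A[f(z)] - E_B[f(z)] of W1 between the two modalities.

    The critic is trained to maximize this value; the encoder would use it as an
    adversarial loss to minimize (the encoder update is not shown here).
    """
    mean_a = sum(critic(w, z) for z in feats_a) / len(feats_a)
    mean_b = sum(critic(w, z) for z in feats_b) / len(feats_b)
    return mean_a - mean_b


def train_critic(feats_a: List[Vector], feats_b: List[Vector],
                 steps: int = 50, lr: float = 0.05, clip: float = 0.1) -> Vector:
    """Gradient ascent on the estimate with WGAN-style weight clipping to [-clip, clip]."""
    d = len(feats_a[0])
    # For a linear critic, the gradient of the estimate w.r.t. w is mean(A) - mean(B).
    grad = [
        sum(z[j] for z in feats_a) / len(feats_a) - sum(z[j] for z in feats_b) / len(feats_b)
        for j in range(d)
    ]
    w = [0.0] * d
    for _ in range(steps):
        w = [min(clip, max(-clip, wi + lr * gj)) for wi, gj in zip(w, grad)]
    return w


if __name__ == "__main__":
    a = [[1.0, 0.0], [1.0, 0.0]]  # invariant features from modality A (toy data)
    b = [[0.0, 0.0], [0.0, 0.0]]  # invariant features from modality B (toy data)
    w = train_critic(a, b)
    print(w, wasserstein_estimate(w, a, b))
```

For a linear critic the gradient is constant, so the clipped weights settle at ±`clip` along the directions where the modality means differ. When the two feature sets coincide, the estimate is zero and the encoder receives no adversarial signal, which is the target state for domain-invariant features.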
