Polycentric AI Governance: A Multi-Stakeholder Approach to Distributed Responsibility and Ethical Technology Management

Keywords:

Artificial intelligence, governance, distributed responsibility, stakeholder accountability, AI ethics frameworks, regulation

Abstract

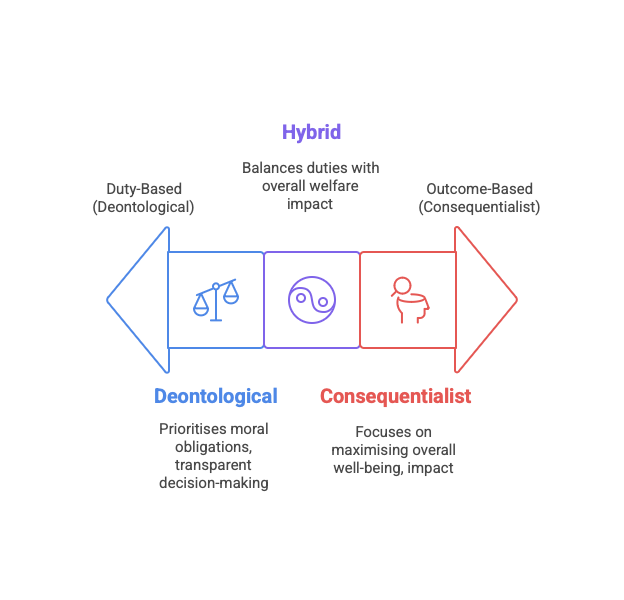

The rapid evolution of artificial intelligence (AI) technologies has made it increasingly difficult to determine who is accountable when AI systems cause harm. This narrative review traces the development of AI governance frameworks from 2018 to 2024, with particular attention to emerging frameworks of distributed responsibility, under which developers, users, and regulators are each legally bound within hybrid deontological-consequentialist governance systems. Drawing on an analysis of policies, regulatory frameworks, case studies, and recent policy shifts, it argues that the ‘single-point accountability’ model is increasingly inadequate for modern AI systems. The analysis identifies a global shift toward distributed-responsibility governance frameworks inspired by the EU AI Act 2024, UNESCO’s AI Ethics Recommendation 2021, and national governance frameworks emerging from Malaysia, Singapore, Australia, and Denmark. Applying stakeholder theory, duty of care, and Floridi’s information ethics, the review responds to the chief criticisms of distributed responsibility, such as concerns over diluted accountability and complexity of implementation. It contributes to the scholarly discourse on contemporary AI ethics by proposing a comprehensive policy framework that implements distributed responsibility through tiered-responsibility strategies, mandatory algorithmic impact assessments, and internationally coordinated oversight mechanisms. The proposed framework offers balanced and practically implementable ways to reconcile the competing demands of accountability and innovation within a networked technological landscape.

References

S. Russell and P. Norvig, Artificial Intelligence: A Modern Approach, 4th ed. Pearson, 2020.

L. Floridi et al., "AI4People—An ethical framework for a good AI society," Minds Mach., vol. 28, no. 4, pp. 689–707, 2018.

S. Barocas, M. Hardt, and A. Narayanan, Fairness and Machine Learning. MIT Press, 2023.

C. O'Neil, Weapons of Math Destruction. Crown, 2016.

A. F. Winfield and M. Jirotka, "Ethical governance is essential to building trust in robotics and AI systems," Philos. Trans. R. Soc. A, vol. 376, no. 2133, p. 20180085, 2018.

A. Jobin, M. Ienca, and E. Vayena, "The global landscape of AI ethics guidelines," Nat. Mach. Intell., vol. 1, no. 9, pp. 389–399, 2019.

D. Amodei et al., "Concrete Problems in AI Safety," arXiv preprint, arXiv:1606.06565, 2016.

F. A. Raso et al., Artificial Intelligence & Human Rights. Berkman Klein Center, 2018.

M. Coeckelbergh, "Artificial Intelligence, Responsibility Attribution, and a Relational Justification of Explainability," Sci. Eng. Ethics, vol. 26, no. 4, pp. 2051–2068, 2020.

J. B. Bullock et al., Eds., The Oxford Handbook of AI Governance. Oxford Univ. Press, 2024.

E. Hohma, C. Lütge, and A. Kiesel, "Investigating accountability for Artificial Intelligence through risk governance: A workshop-based exploratory study," PLoS One, vol. 18, no. 2, p. e0280845, 2023.

C. Cath et al., "Artificial Intelligence and the 'Good Society'," Sci. Eng. Ethics, vol. 24, no. 2, pp. 505–528, 2018.

I. D. Raji et al., "Closing the AI accountability gap: defining an end-to-end framework for internal algorithmic auditing," in Proc. FAT*, 2020, pp. 33–44.

I. Kant, Groundwork for the Metaphysics of Morals. Cambridge Univ. Press, 1785/1997.

J. S. Mill, Utilitarianism. Parker, Son, and Bourn, 1863.

T. L. Beauchamp and J. F. Childress, Principles of Biomedical Ethics, 8th ed. Oxford Univ. Press, 2019.

R. E. Freeman, Strategic Management: A Stakeholder Approach. Pitman, 1984.

R. K. Mitchell, B. R. Agle, and D. J. Wood, "Toward a theory of stakeholder identification and salience," Acad. Manage. Rev., vol. 22, no. 4, pp. 853–886, 1997.

ISO/IEC, ISO/IEC 38507:2022 – Governance implications of the use of artificial intelligence by organizations, International Organization for Standardization, 2022.

F. Santoni de Sio and G. Mecacci, "Four responsibility gaps with artificial intelligence: why they matter and how to address them," Philos. Technol., vol. 34, no. 4, pp. 1057–1084, 2021.

B. Latour, Reassembling the Social: An Introduction to Actor-Network-Theory. Oxford Univ. Press, 2005.

E. Ostrom, "Polycentric systems for coping with collective action and global environmental change," Glob. Environ. Change, vol. 20, no. 4, pp. 550–557, 2010.

A. G. Scherer and G. Palazzo, "The new political role of business in a globalised world," J. Manage. Stud., vol. 48, no. 4, pp. 899–931, 2011.

L. Floridi, The Ethics of Information. Oxford Univ. Press, 2013.

L. Floridi, "Translating the Digital Divide into Digital Inequality," Inf. Soc., vol. 18, no. 2, pp. 105–118, 2002.

D. Dobbs, P. T. Hayden, and E. M. Bublick, The Law of Torts, 2nd ed. West Academic, 2016.

R. Abbott, "The Reasonable Computer: Disrupting the Paradigm of Tort Liability," Geo. Wash. Law Rev., vol. 86, no. 1, pp. 1–45, 2018.

A. D. Selbst, "Negligence and AI's Human Users," BU Law Rev., vol. 100, no. 4, pp. 1315–1374, 2021.

Regulation (EU) 2016/679 (GDPR), Off. J. Eur. Union, vol. L119, pp. 1–88, 2016.

S. Wachter, B. Mittelstadt, and L. Floridi, "Why a right to explanation does not exist in GDPR," Int. Data Privacy Law, vol. 7, no. 2, pp. 76–99, 2017.

M. Veale, R. Binns, and L. Edwards, "Algorithms that remember: model inversion attacks and data protection law," Philos. Trans. R. Soc. A, vol. 376, no. 2133, p. 20180083, 2018.

L. Edwards and M. Veale, "Slave to the algorithm: why a right to explanation is probably not the remedy," Duke Law Tech. Rev., vol. 16, no. 1, pp. 18–84, 2017.

M. E. Kaminski, "The right to explanation, explained," Berkeley Tech. Law J., vol. 34, no. 1, pp. 189–218, 2019.

Regulation (EU) 2024/1689 (AI Act), Off. J. Eur. Union, vol. L1689, pp. 1–144, 2024.

M. Veale and F. Z. Borgesius, "Demystifying the Draft EU AI Act," Comput. Law Rev. Int., vol. 22, no. 4, pp. 97–112, 2021.

European Commission, Commission Establishes AI Office to Strengthen EU Leadership in Safe and Trustworthy Artificial Intelligence, May 29, 2024.

European Commission, AI Act Enters into Force, Aug. 1, 2024.

M. Ebers et al., "The European Commission's AI Act Proposal," J. Med. Internet Res., vol. 23, no. 7, p. e29596, 2021.

J. Laux, S. Wachter, and B. Mittelstadt, "Taming the few: Platform regulation and auditing risks," Comput. Law Secur. Rev., vol. 52, p. 105942, 2024.

I. Rahwan et al., "Machine behaviour," Nature, vol. 568, no. 7753, pp. 477–486, 2019.

G. Marcus, "Deep Learning: A Critical Appraisal," arXiv preprint, arXiv:1801.00631, 2018.

A. Matthias, "The responsibility gap: Ascribing responsibility for learning automata actions," Ethics Inf. Technol., vol. 6, no. 3, pp. 175–183, 2004.

J. Dastin, "Amazon scraps secret AI recruiting tool showing bias against women," Reuters, Oct. 10, 2018.

M. Raghavan, S. Barocas, J. Kleinberg, and K. Levy, "Mitigating bias in algorithmic hiring," in Proc. FAT*, 2020, pp. 469–481.

A. Paulin, "Through the GDPR lens: automated individual decision-making approaches," Eur. Data Prot. Law Rev., vol. 4, no. 1, pp. 22–34, 2018.

R. Baeza-Yates, "Bias on the web," Commun. ACM, vol. 61, no. 6, pp. 54–61, 2018.

NTSB, Collision Between Vehicle Controlled by Automated Driving System and Pedestrian, Report NTSB/HAR-19/03, 2019.

J. Stilgoe, "Machine learning, social learning and self-driving car governance," Soc. Stud. Sci., vol. 48, no. 1, pp. 25–56, 2018.

J. K. Gurney, "Sue my car not me: Products liability and autonomous vehicle accidents," U. Ill. J. Law Tech. Policy, vol. 2013, no. 2, pp. 247–277, 2013.

J. Isaak and M. J. Hanna, "User data privacy: Facebook, Cambridge Analytica, and privacy protection," Computer, vol. 51, no. 8, pp. 56–59, 2018.

C. J. Bennett and D. Lyon, "Data-driven elections: implications for democratic societies," Internet Policy Rev., vol. 8, 2019.

S. Zuboff, The Age of Surveillance Capitalism. PublicAffairs, 2019.

T. Davenport and R. Kalakota, "The potential for artificial intelligence in healthcare," Future Healthc. J., vol. 6, no. 2, pp. 94–98, 2019.

D. S. W. Ting et al., "Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes," JAMA, vol. 318, no. 22, pp. 2211–2223, 2017.

L. R. Varshney, "Fundamental limits of data analytics in sociotechnical systems," Nat. Mach. Intell., vol. 1, no. 12, pp. 571–580, 2019.

A. Rajkomar et al., "Ensuring fairness in machine learning to advance health equity," Ann. Intern. Med., vol. 169, no. 12, pp. 866–872, 2018.

UNESCO, Recommendation on the Ethics of Artificial Intelligence, 2021.

UN High-Level Advisory Body on Artificial Intelligence, Governing AI for Humanity: Final Report, 2024.

Supreme Court of India, "Justice K. S. Puttaswamy (Retd.) v. Union of India," Writ Petition (Civil) No. 494 of 2012, 2018.

Brazilian Chamber of Deputies, "Projeto de Lei nº 21/2020: Regulatory Framework for Artificial Intelligence in Brazil," 2024 update.

IEEE Standards Association, IEEE 7000-2021 - IEEE Standard Model Process for Addressing Ethical Concerns During System Design, 2021.

ISO/IEC, ISO/IEC 42001:2023 Information technology — Artificial intelligence — Management system, 2023.

Malaysia National AI Office, National AI Governance Framework, 2024.

Singapore Personal Data Protection Commission, Model AI Governance Framework for Generative AI, 2024.

Singapore AI Verify Foundation, Multi-stakeholder AI Governance Report, 2024.

Australian Government, Voluntary AI Safety Standard, 2024.

Danish Government, AI Competence Pact, 2024.

M. Bovens, "Analysing and assessing accountability: a conceptual framework," Eur. Law J., vol. 13, no. 4, pp. 447–468, 2007.

H. Nissenbaum, "Accountability in a computerized society," Sci. Eng. Ethics, vol. 2, no. 1, pp. 25–42, 1996.

I. Young, "Responsibility and global justice: A social connection model," Soc. Philos. Policy, vol. 23, no. 1, pp. 102–130, 2006.

A. Crane and D. Matten, Business Ethics: Managing Corporate Citizenship and Sustainability in the Age of Globalization, 4th ed. Oxford Univ. Press, 2016.

R. Phillips, Stakeholder Theory and Organizational Ethics. Berrett-Koehler Publishers, 2003.

A. Wicks, D. Gilbert, and R. Freeman, "A feminist reinterpretation of the stakeholder concept," Bus. Ethics Q., vol. 4, no. 4, pp. 475–497, 1994.

J. Dryzek, Deliberative Democracy and Beyond. Oxford Univ. Press, 2000.

OECD, AI and Competition, 2021.

P. Cihon, "Standards for AI governance: international standards to enable global coordination in AI research and development," Future of Humanity Institute, 2019.

A. Dafoe et al., "Cooperative AI: machines must learn to find common ground," Nature, vol. 593, no. 7857, pp. 33–36, 2021.

M. Scherer, "Regulating artificial intelligence systems: risks, challenges, competencies, and strategies," Harv. J. Law Technol., vol. 29, no. 2, pp. 353–400, 2016.

R. Calo, "Robotics and the lessons of cyberlaw," Calif. Law Rev., vol. 103, no. 3, pp. 513–563, 2015.

J. Morley et al., "The ethics of AI in health care: a mapping review," Soc. Sci. Med., vol. 260, p. 113172, 2020.

M. Whittaker et al., AI Now Report 2018. AI Now Institute, 2018.

R. Binns, "Algorithmic accountability and public reason," Philos. Technol., vol. 31, no. 4, pp. 543–556, 2018.

H. Richardson, Democratic Autonomy. Oxford Univ. Press, 2002.

A. Dafoe, "AI governance: a research agenda," Governance of AI Program, Future of Humanity Institute, 2018.