EchoKG: A Dynamic User Preference Knowledge Graph In-Vehicle Dialogue System Based on the Ebbinghaus Forgetting Curve

Keywords:

Large Language Model, Dialogue System, Knowledge Graph, Forgetting Curve

Abstract

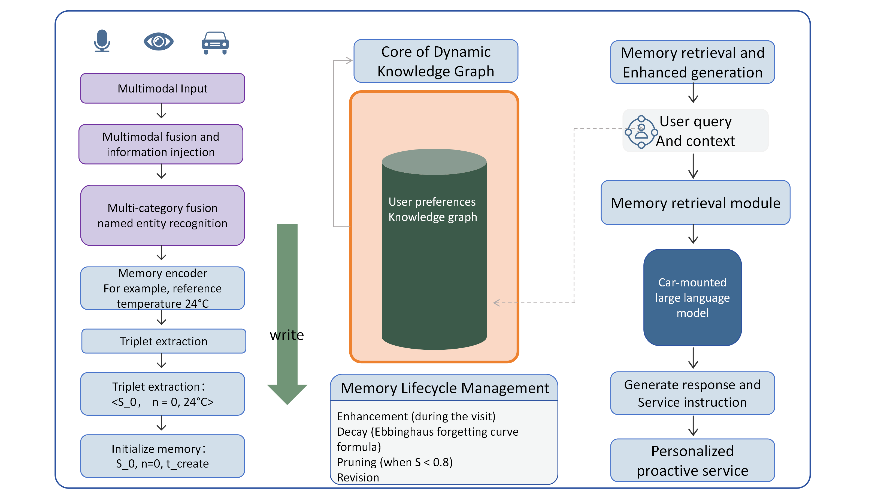

As large language models (LLMs) are increasingly integrated into intelligent vehicle cockpits, delivering efficient, accurate, and personalized interaction with long-term memory has become a key challenge. Existing vector retrieval methods suffer from context inflation, while static knowledge graphs struggle to capture how user preferences change over time. This paper proposes EchoKG, a framework that, for the first time, mathematically models the Ebbinghaus forgetting curve as a dynamic weighting mechanism for knowledge graph nodes, so that user preferences decay and are reinforced naturally. By introducing a memory strength S and a last access time, EchoKG dynamically manages the lifecycle of each memory. Experimental results on the fully open-source dataset EchoCar-Public show that, compared with MemoryBank, static knowledge graphs, and GPT-4o Memory, EchoKG reduces the average context length by 32%, increases the intent recognition F1 score by 5.1%, and improves the personalized consistency score by 0.68 points, while keeping response latency within 800 ms.
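The decay-and-reinforcement idea can be illustrated with a minimal sketch. The abstract does not state EchoKG's actual weighting formula, so the code below assumes the classical form of the forgetting curve, R = exp(-t / S), where t is the time since last access and S is the memory strength. The class name `PreferenceNode`, the multiplicative `boost` on recall, the pruning `threshold`, and the use of hours as the time unit are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class PreferenceNode:
    """Hypothetical knowledge-graph node holding one user preference."""
    label: str
    strength: float      # memory strength S (assumed unit: hours)
    last_access: float   # last access time (hours since an arbitrary epoch)

    def retention(self, now: float) -> float:
        """Assumed classical forgetting curve: R = exp(-t / S)."""
        elapsed = max(0.0, now - self.last_access)
        return math.exp(-elapsed / self.strength)

    def reinforce(self, now: float, boost: float = 1.5) -> None:
        """On recall, grow S and reset the clock (the boost rule is an assumption)."""
        self.strength *= boost
        self.last_access = now


def active_nodes(nodes, now, threshold=0.3):
    """Keep only nodes whose retention is at or above the threshold."""
    return [n for n in nodes if n.retention(now) >= threshold]


# Usage: two preferences stored at t = 0; only one is mentioned again at t = 12.
jazz = PreferenceNode("likes jazz", strength=24.0, last_access=0.0)
seat = PreferenceNode("seat heating on", strength=24.0, last_access=0.0)
jazz.reinforce(now=12.0)
kept = active_nodes([jazz, seat], now=48.0)
print([n.label for n in kept])
```

In this sketch the reinforced preference survives the cutoff at t = 48 while the untouched one falls below it, which is one way a graph could keep its retrieved context short without deleting nodes outright.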

References

Murali, P. K., Kaboli, M., & Dahiya, R. (2022). Intelligent in‐vehicle interaction technologies. Advanced Intelligent Systems, 4(2), 2100122.

Zhong, W., Guo, L., Gao, Q., Ye, H., & Wang, Y. (2024, March). MemoryBank: Enhancing large language models with long-term memory. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 38, No. 17, pp. 19724-19731).

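Ebbinghaus, H. (1913). Memory: A Contribution to Experimental Psychology (H. A. Ruger & C. E. Bussenius, Trans.). Teachers College, Columbia University. (Original work published 1885)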

Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., ... & Kiela, D. (2020). Retrieval-augmented generation for knowledge-intensive NLP tasks. Advances in Neural Information Processing Systems, 33, 9459-9474.

Packer, C., Fang, V., Patil, S., Lin, K., Wooders, S., & Gonzalez, J. (2023). MemGPT: Towards LLMs as operating systems. arXiv preprint arXiv:2310.08560.

Liu, W., Zhou, P., Zhao, Z., Wang, Z., Ju, Q., Deng, H., & Wang, P. (2020, April). K-BERT: Enabling language representation with knowledge graph. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 34, No. 03, pp. 2901-2908).

Settles, B., & Meeder, B. (2016, August). A trainable spaced repetition model for language learning. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) (pp. 1848-1858).

Park, J. S., O'Brien, J., Cai, C. J., Morris, M. R., Liang, P., & Bernstein, M. S. (2023, October). Generative agents: Interactive simulacra of human behavior. In Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology (pp. 1-22).

Trivedi, R., Dai, H., Wang, Y., & Song, L. (2017, July). Know-Evolve: Deep temporal reasoning for dynamic knowledge graphs. In International Conference on Machine Learning (pp. 3462-3471). PMLR.

Bai, J., Bai, S., Chu, Y., Cui, Z., Dang, K., Deng, X., ... & Zhu, T. (2023). Qwen technical report. arXiv preprint arXiv:2309.16609.

Budzianowski, P., Wen, T. H., Tseng, B. H., Casanueva, I., Ultes, S., Ramadan, O., & Gašić, M. (2018). MultiWOZ: A large-scale multi-domain Wizard-of-Oz dataset for task-oriented dialogue modelling. arXiv preprint arXiv:1810.00278.

Rastogi, A., Zang, X., Sunkara, S., Gupta, R., & Khaitan, P. (2020, April). Towards scalable multi-domain conversational agents: The schema-guided dialogue dataset. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 34, No. 05, pp. 8689-8696).

Eric, M., Krishnan, L., Charette, F., & Manning, C. D. (2017, August). Key-value retrieval networks for task-oriented dialogue. In Proceedings of the 18th Annual SIGdial Meeting on Discourse and Dialogue (pp. 37-49).

Papineni, K., Roukos, S., Ward, T., & Zhu, W. J. (2002, July). BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics (pp. 311-318).

Edge, D., Trinh, H., Cheng, N., Bradley, J., Chao, A., Mody, A., ... & Larson, J. (2024). From local to global: A Graph RAG approach to query-focused summarization. arXiv preprint arXiv:2404.16130.